Photography Agent (Fall 2025)

The Photography Agent is a collaborative semester project focused on building a photo organization and editing system powered by an agentic large language model (LLM). The LLM interprets natural language user requests and calls specialized computer vision tools for photo filtering and editing.

LLM Agent (Primary Contribution)

- Base model: Llama-3-8B-Instruct

- Fine-tuned using LoRA on 1,985 curated examples

- Trained to:

  - Generate valid JSON tool-call outputs

  - Select appropriate tools based on user intent

  - Respond naturally when no tool invocation is required

  - Explain available tools when requested

  - Handle ambiguous or invalid requests appropriately

- Designed and iteratively refined a custom system prompt; prompt engineering proved crucial for improving response consistency, reducing hallucinations, and enforcing structured outputs

- Evaluated using a held-out, shuffled test set of 218 examples

- Achieved 88% overall response accuracy across tool selection and conversational tasks
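Reliable tool calling depends on validating the model's JSON before dispatching it, and scoring a held-out set requires a rule for comparing responses. The following is a minimal sketch only, assuming a hypothetical output format of `{"tool": ..., "arguments": {...}}`, a hypothetical three-tool registry (`TOOLS`), and a hypothetical scoring rule (`is_correct`) in which any natural-language reply counts as correct when no tool call is expected. The project's actual schema and scoring method are not specified here.

```python
from __future__ import annotations

import json
from typing import Any

# Hypothetical registry: tool names and argument schemas are illustrative only.
# A tuple lists the allowed values; a type requires an instance of that type.
TOOLS: dict[str, dict[str, Any]] = {
    "focus": {"blurry": bool},
    "exposure": {"level": ("proper", "under", "over")},
    "color": {"tone": ("warm", "cool", "neutral")},
}


def parse_tool_call(text: str) -> dict[str, Any] | None:
    """Return a validated tool call, or None for a plain conversational reply.

    Raises ValueError when the output looks like JSON but is malformed or invalid.
    """
    stripped = text.strip()
    if not stripped.startswith("{"):
        return None  # not a tool call: treat as a natural-language response
    try:
        call = json.loads(stripped)  # text starting with "{" parses to a dict or fails
    except json.JSONDecodeError as exc:
        raise ValueError(f"invalid JSON: {exc}") from exc
    name = call.get("tool")
    args = call.get("arguments", {})
    if not isinstance(name, str) or name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    if not isinstance(args, dict):
        raise ValueError("'arguments' must be a JSON object")
    schema = TOOLS[name]
    for key, value in args.items():
        if key not in schema:
            raise ValueError(f"unexpected argument {key!r} for tool {name!r}")
        allowed = schema[key]
        valid = value in allowed if isinstance(allowed, tuple) else isinstance(value, allowed)
        if not valid:
            raise ValueError(f"invalid value {value!r} for {name}.{key}")
    return {"tool": name, "arguments": args}


def is_correct(prediction: str, reference: str) -> bool:
    """Assumed scoring rule: tool calls must match exactly; any reply matches a reply."""
    try:
        predicted = parse_tool_call(prediction)
    except ValueError:
        return False  # malformed tool calls count as errors
    expected = parse_tool_call(reference)
    if expected is None:
        return predicted is None
    return predicted == expected


def response_accuracy(predictions: list[str], references: list[str]) -> float:
    """Fraction of held-out examples that is_correct accepts."""
    if len(predictions) != len(references) or not references:
        raise ValueError("need equal-length, non-empty lists")
    return sum(is_correct(p, r) for p, r in zip(predictions, references)) / len(references)
```

Treating anything that does not start with `{` as conversation keeps tool explanations and clarifying replies on a separate path from tool calls, while a malformed JSON object raises instead of silently passing through as text.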

System Tools (Team Contributions)

- Focus tool: returns blurry or non-blurry images

- Exposure tool: returns properly exposed, underexposed, or overexposed images

- Color tool: returns images with warm, cool, or neutral color tones

- Album filtering tool: returns images that match a specified topic

- Background blur tool: applies background blur to selected images

- Object removal tool: removes people or vehicles from selected images
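The focus, exposure, and color filters can each be approximated with a simple image statistic. The sketch below is an illustration only, not the team's implementation: it uses the variance of the Laplacian for focus, mean luminance for exposure, and the red-minus-blue channel difference for color tone, on images represented as nested Python lists. These heuristics and all thresholds (`BLUR_THRESHOLD`, `UNDER_EXPOSED`, `OVER_EXPOSED`, `TONE_MARGIN`) are assumptions.

```python
from __future__ import annotations

from statistics import mean, pvariance

Gray = list[list[float]]                 # rows of luminance values in [0, 255]
RGB = list[list[tuple[int, int, int]]]   # rows of (R, G, B) pixels

# Hypothetical thresholds; real values would need tuning on a photo library.
BLUR_THRESHOLD = 100.0
UNDER_EXPOSED, OVER_EXPOSED = 70.0, 185.0
TONE_MARGIN = 10.0


def laplacian_variance(img: Gray) -> float:
    """Variance of the 4-neighbour Laplacian over interior pixels (needs >= 3x3)."""
    h, w = len(img), len(img[0])
    responses = [
        img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    return pvariance(responses)


def classify_focus(img: Gray) -> str:
    # Sharp edges produce strong Laplacian responses, so low variance suggests blur.
    return "blurry" if laplacian_variance(img) < BLUR_THRESHOLD else "non-blurry"


def classify_exposure(img: Gray) -> str:
    brightness = mean(v for row in img for v in row)
    if brightness < UNDER_EXPOSED:
        return "underexposed"
    if brightness > OVER_EXPOSED:
        return "overexposed"
    return "properly exposed"


def classify_tone(img: RGB) -> str:
    pixels = [p for row in img for p in row]
    red = mean(p[0] for p in pixels)
    blue = mean(p[2] for p in pixels)
    if red - blue > TONE_MARGIN:
        return "warm"
    if blue - red > TONE_MARGIN:
        return "cool"
    return "neutral"
```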

Links

Demo of Photography Agent

Diabetic Retinopathy Classification Preprocessing Evaluation (Spring 2024)

In this project, a partner and I investigated the impact of image preprocessing techniques on diabetic retinopathy (DR) severity classification using deep learning models, with the goal of determining whether any single preprocessing method performs best across models.

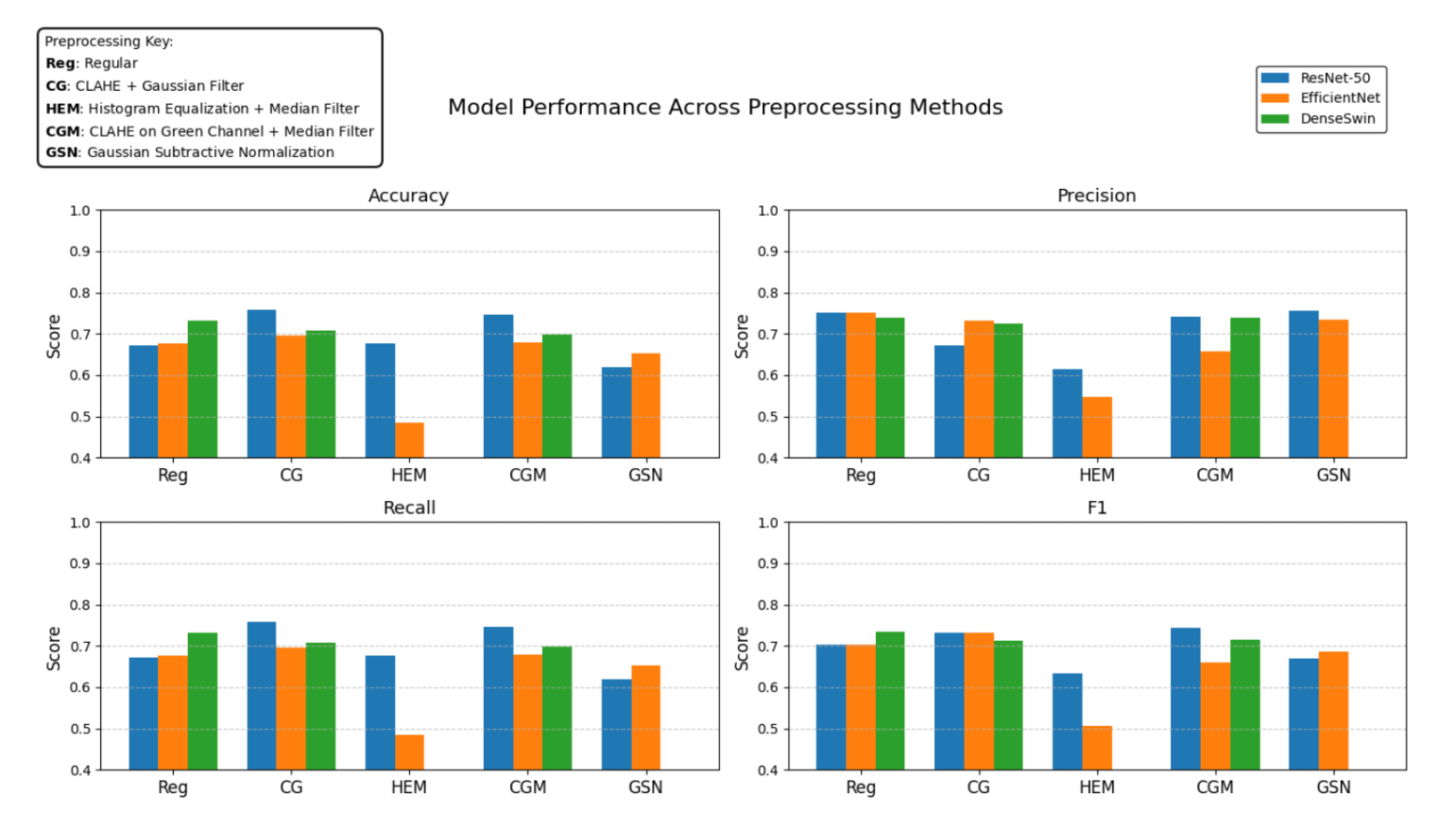

- Fine-tuned and evaluated ResNet-50, EfficientNet, and a hybrid DenseNet–Swin Transformer (DenseSwin) on a dataset created from the APTOS 2019 and EyePACS retinal fundus datasets.

- Compared five preprocessing pipelines, including CLAHE-based contrast enhancement, histogram equalization, Gaussian subtractive normalization, and a baseline preprocessing pipeline.
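Gaussian subtractive normalization subtracts a heavily blurred copy of a fundus image from the original to suppress slow illumination changes, then re-centers intensities around mid-gray. A minimal single-channel sketch, assuming the common formulation I' = 4 * (I - G_sigma * I) + 128, clipped to [0, 255]; the weight, offset, and `sigma` default are assumptions rather than the project's settings, and a production pipeline would use an optimized image library instead of nested lists.

```python
from __future__ import annotations

import math

Image = list[list[float]]  # single channel, values in [0, 255]


def gaussian_kernel(sigma: float) -> list[float]:
    """Normalized 1-D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def _blur_rows(img: Image, kernel: list[float]) -> Image:
    """Convolve each row with the kernel, clamping indices at the borders."""
    r = len(kernel) // 2
    w = len(img[0])
    return [
        [sum(k * row[min(max(x + i - r, 0), w - 1)] for i, k in enumerate(kernel)) for x in range(w)]
        for row in img
    ]


def gaussian_blur(img: Image, sigma: float) -> Image:
    """Separable blur: rows first, then columns via transpose."""
    kernel = gaussian_kernel(sigma)
    horizontal = _blur_rows(img, kernel)
    transposed = [list(col) for col in zip(*horizontal)]
    return [list(col) for col in zip(*_blur_rows(transposed, kernel))]


def gaussian_subtractive_normalize(
    img: Image, sigma: float = 10.0, weight: float = 4.0, offset: float = 128.0
) -> Image:
    """Emphasize local detail by removing low-frequency background illumination."""
    blurred = gaussian_blur(img, sigma)
    return [
        [min(255.0, max(0.0, weight * (p - b) + offset)) for p, b in zip(row, brow)]
        for row, brow in zip(img, blurred)
    ]
```

Pixels brighter than their blurred neighborhood (for example, small lesions) are pushed above 128 and darker ones below it, while uniformly lit regions collapse toward 128.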

My Contributions

- Implemented and fine-tuned EfficientNet and DenseNet-based (DenseSwin) models.

- Implemented retinal image preprocessing pipelines, including Gaussian Subtractive Normalization, CLAHE on the green channel with median filtering, and standard preprocessing.

- Performed quantitative evaluation and result visualization, including accuracy, precision, recall, and F1 score comparisons across models and preprocessing methods.
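The CLAHE pipeline listed above (green channel, median filtering, contrast enhancement) can be sketched in plain Python. This is a deliberate simplification: `clip_limited_equalize` applies CLAHE's clip-and-redistribute step to one global histogram, without the per-tile histograms and bilinear interpolation that full CLAHE uses (OpenCV's `cv2.createCLAHE` provides the full algorithm). Running the median filter before contrast enhancement is an assumed order, and the function names are hypothetical.

```python
from __future__ import annotations

from statistics import median

Gray = list[list[int]]                   # integer intensities in [0, 255]
RGB = list[list[tuple[int, int, int]]]


def green_channel(img: RGB) -> Gray:
    """The green channel typically shows retinal vessels with the best contrast."""
    return [[g for _, g, _ in row] for row in img]


def median_filter(img: Gray, radius: int = 1) -> Gray:
    """Replace each pixel with the median of its (2r+1)x(2r+1) neighbourhood."""
    h, w = len(img), len(img[0])
    return [
        [
            int(median(
                img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in range(-radius, radius + 1)
                for dx in range(-radius, radius + 1)
            ))
            for x in range(w)
        ]
        for y in range(h)
    ]


def clip_limited_equalize(img: Gray, clip_limit: float = 2.0, levels: int = 256) -> Gray:
    """Global histogram equalization with CLAHE-style histogram clipping."""
    pixels = [v for row in img for v in row]
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    # Clip each bin at clip_limit x the uniform height and spread the excess evenly,
    # which caps how steep the intensity mapping can become.
    ceiling = clip_limit * len(pixels) / levels
    excess = sum(max(0.0, c - ceiling) for c in hist)
    clipped = [min(c, ceiling) + excess / levels for c in hist]
    # The cumulative distribution becomes the lookup table.
    total = sum(clipped)
    lut, running = [], 0.0
    for c in clipped:
        running += c
        lut.append(round((levels - 1) * running / total))
    return [[lut[v] for v in row] for row in img]


def clahe_green_pipeline(img: RGB) -> Gray:
    return clip_limited_equalize(median_filter(green_channel(img)))
```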

Results

- Measured the accuracy, precision, recall, and F1 score of each model after fine-tuning it on data processed with each preprocessing method.

- The plot shows that preprocessing effectiveness is model-dependent: CLAHE + Gaussian filtering improved ResNet-50 and EfficientNet performance, while the hybrid DenseSwin model performed best with standard preprocessing.

- This highlights the importance of architecture-aware preprocessing strategies for DR screening systems.

Links

Cell Type & Cancer Classification (Fall 2024)

This project applies deep learning to automated multi-class cell-type and cancer classification using the CellNet medical imaging dataset. The models classify images into 19 cell types and distinguish benign cells from multiple cancer subtypes, with the goal of improving speed and reliability in medical image analysis.

- Fine-tuned and evaluated four models (ResNet, EfficientNet, a Multilayer Perceptron (MLP), and a Swin Transformer) on the CellNet dataset

- Evaluated models using test accuracy, weighted F1-score, training computation time, and confusion matrices
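Test accuracy and weighted F1 can both be derived from a confusion matrix. A minimal sketch with toy integer labels; `macro_f1` is included only for comparison and is not one of the metrics reported in this project. Classes with no predictions or no true examples receive a score of 0 here, which is an assumed convention.

```python
from __future__ import annotations

Matrix = list[list[int]]


def confusion_matrix(y_true: list[int], y_pred: list[int], n_classes: int) -> Matrix:
    """Rows are true classes, columns are predicted classes."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def per_class_f1(cm: Matrix) -> list[float]:
    n = len(cm)
    scores = []
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, actually another class
        fn = sum(cm[c]) - tp                       # actually c, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        scores.append(2 * precision * recall / (precision + recall) if precision + recall else 0.0)
    return scores


def weighted_f1(cm: Matrix) -> float:
    """Average of per-class F1 weighted by each class's support (row sum)."""
    support = [sum(row) for row in cm]
    return sum(f * s for f, s in zip(per_class_f1(cm), support)) / sum(support)


def macro_f1(cm: Matrix) -> float:
    """Unweighted average of per-class F1 (every class counts equally)."""
    scores = per_class_f1(cm)
    return sum(scores) / len(scores)


def accuracy(cm: Matrix) -> float:
    return sum(cm[i][i] for i in range(len(cm))) / sum(map(sum, cm))
```

scikit-learn's `f1_score(y_true, y_pred, average="weighted")` computes the same weighted quantity. Because weighted F1 weights each class by its support, comparing it against macro F1 shows how much of the score comes from the larger classes.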

Swin Transformer (Primary Contribution)

- Implemented a hierarchical Swin Transformer for large-scale medical image classification

- Used shifted-window self-attention for efficient, scalable training

- Explored architectural trade-offs to reduce compute without sacrificing accuracy

- Reduced embedding dimension and attention heads to significantly improve runtime

- Performed grid search over learning rate, batch size, and MLP ratio

- Final configuration achieved:

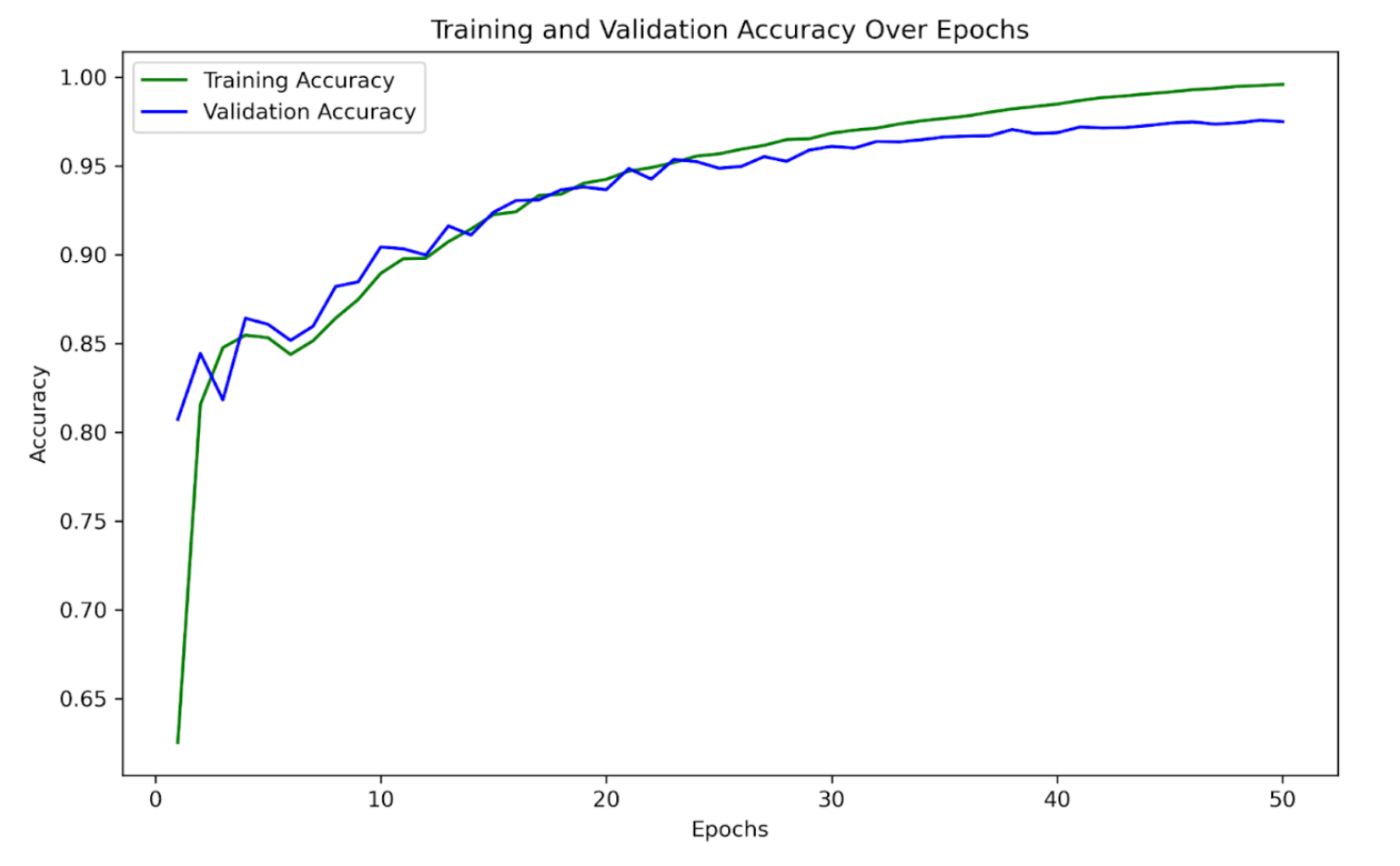

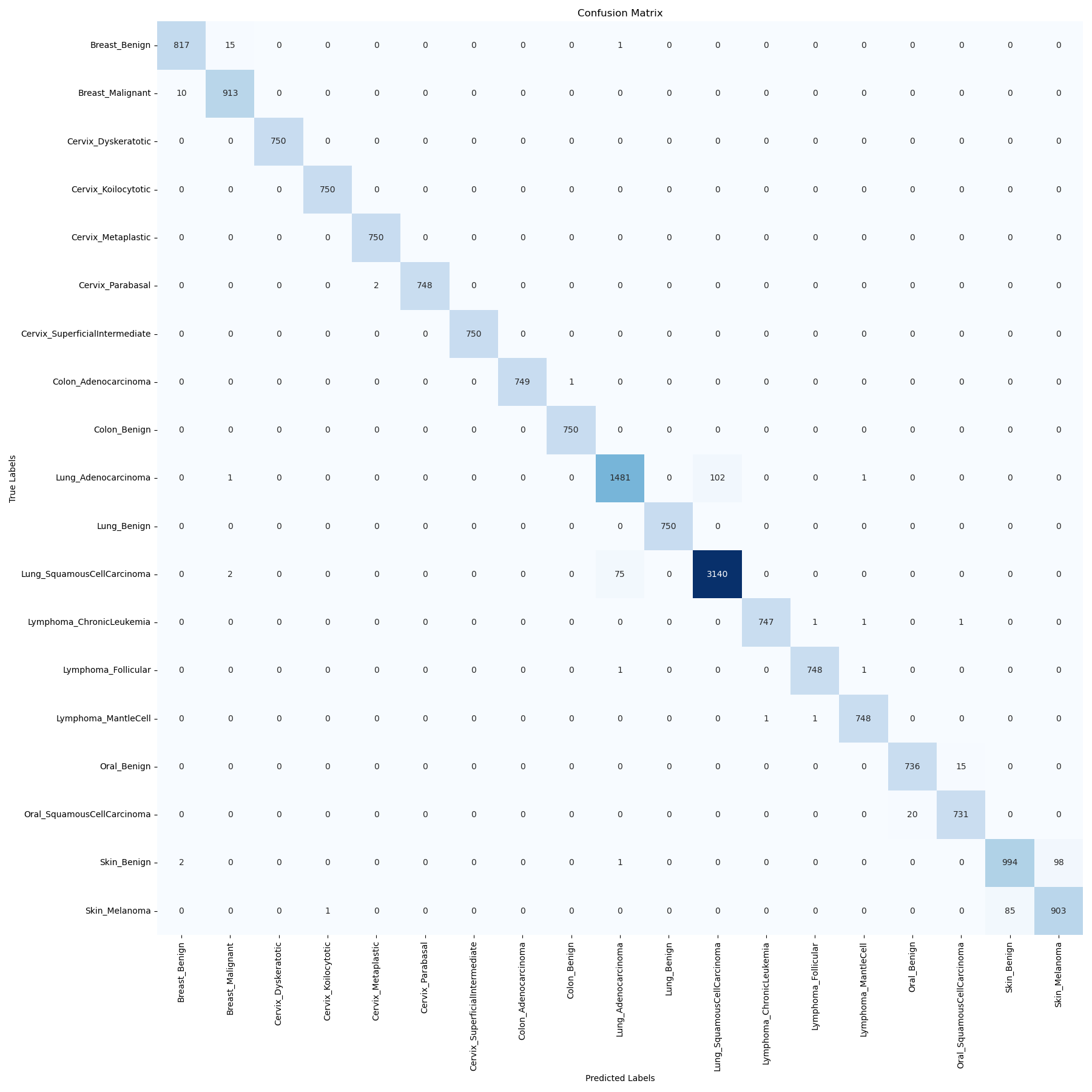

- Test Accuracy: 97.6%

- Weighted F1 Score: 0.976
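The shifted-window mechanism can be illustrated on a small grid of token indices: tokens are grouped into non-overlapping M x M windows for self-attention, and alternating blocks cyclically shift the grid by about half a window so that the next partition crosses the previous window boundaries. The sketch also encodes the per-block complexity estimates from the Swin Transformer paper (Liu et al., 2021), global MSA 4hwC^2 + 2(hw)^2 C versus window MSA 4hwC^2 + 2M^2 hwC, which show that windowed attention grows linearly with the number of tokens and that shrinking the embedding dimension C cuts cost. It omits the attention mask that real implementations apply after the shift, and the function names are hypothetical.

```python
from __future__ import annotations

Grid = list[list[int]]


def cyclic_shift(grid: Grid, shift: int) -> Grid:
    """Roll the token grid up and left by `shift` (like torch.roll with negative shifts)."""
    h, w = len(grid), len(grid[0])
    return [[grid[(y + shift) % h][(x + shift) % w] for x in range(w)] for y in range(h)]


def window_partition(grid: Grid, m: int) -> list[list[int]]:
    """Split an HxW grid into non-overlapping MxM windows, each flattened row-major."""
    h, w = len(grid), len(grid[0])
    if h % m or w % m:
        raise ValueError("grid height and width must be divisible by the window size")
    return [
        [grid[wy + y][wx + x] for y in range(m) for x in range(m)]
        for wy in range(0, h, m)
        for wx in range(0, w, m)
    ]


def attention_flops(h: int, w: int, c: int, m: int | None = None) -> int:
    """Approximate cost of one attention block on an h x w token grid with dim c.

    m=None gives global multi-head self-attention; otherwise window attention
    with m x m windows. The head count does not appear in these estimates.
    """
    n = h * w
    projection = 4 * n * c * c  # Q, K, V and output projections
    if m is None:
        return projection + 2 * n * n * c
    return projection + 2 * m * m * n * c
```

Within each window, attention is computed only among M^2 tokens, so the quadratic term depends on the fixed window size rather than on the full image size, while the shift lets information flow between neighboring windows across layers.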

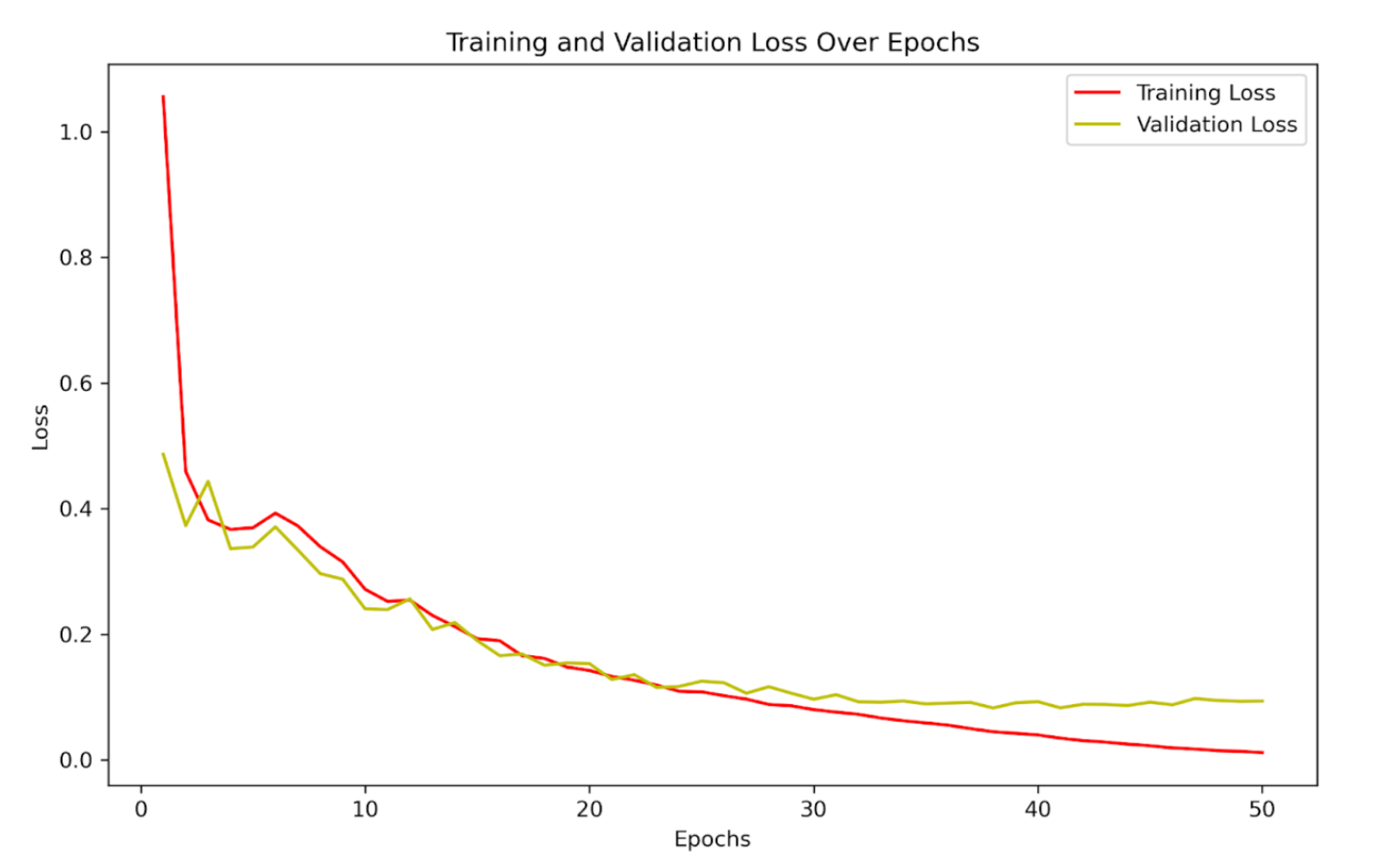

- Training and validation accuracy increased steadily, indicating effective learning.

- Training and validation loss consistently decreased throughout training.

- Close alignment between training and validation curves suggests strong generalization.

- Overall, the model achieves high accuracy with no evident overfitting.

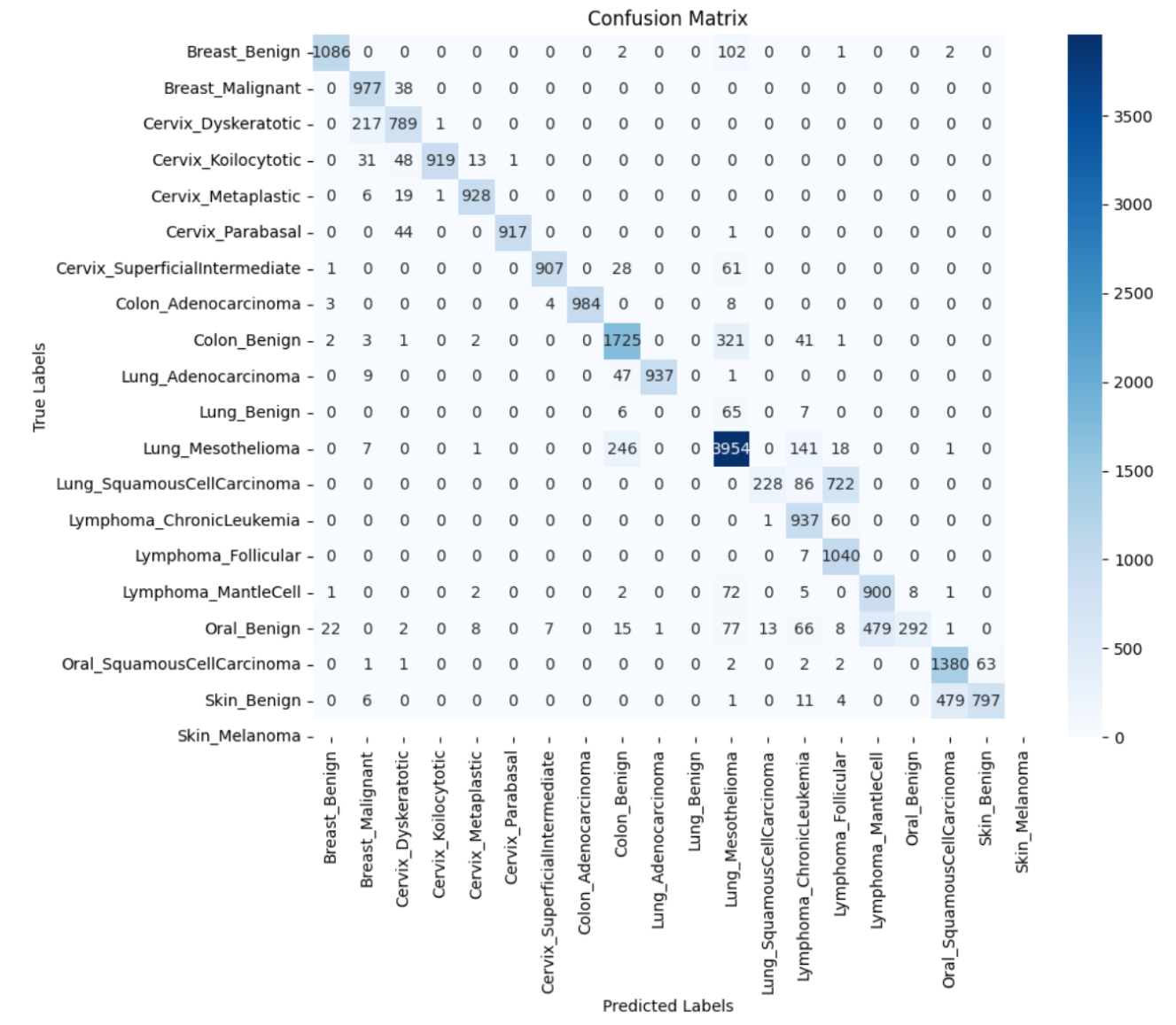

- The confusion matrix shows strong class-wise performance, further supporting the model’s robustness.
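The grid search over learning rate, batch size, and MLP ratio used to tune the Swin Transformer can be sketched as an exhaustive loop over the Cartesian product of candidate values. The `SEARCH_SPACE` values and the `evaluate` callback (which would briefly train a model with the given configuration and return a validation score) are hypothetical placeholders; the project's actual candidate values are not stated.

```python
from __future__ import annotations

import itertools
from typing import Any, Callable

# Hypothetical search space; illustrative values only.
SEARCH_SPACE: dict[str, list[Any]] = {
    "learning_rate": [1e-4, 3e-4, 1e-3],
    "batch_size": [32, 64],
    "mlp_ratio": [2.0, 4.0],
}


def grid_search(
    evaluate: Callable[[dict[str, Any]], float], space: dict[str, list[Any]]
) -> tuple[dict[str, Any] | None, float]:
    """Evaluate every combination and keep the one with the highest score."""
    keys = list(space)
    best_config: dict[str, Any] | None = None
    best_score = float("-inf")
    for values in itertools.product(*(space[k] for k in keys)):
        config = dict(zip(keys, values))
        score = evaluate(config)  # e.g. validation weighted F1 after a short run
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score
```

Exhaustive search is simple and reproducible, but its cost grows multiplicatively with each added hyperparameter (here 3 x 2 x 2 = 12 runs).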

Baseline Models (Team Contributions)

- ResNet50: Served as a strong convolutional baseline, achieving 83.9% test accuracy and a weighted F1 score of 0.826.

- EfficientNet: Reached moderate test accuracy but was severely affected by class imbalance, resulting in a very low weighted F1 score.

- Multilayer Perceptron (MLP): Functioned as a non-convolutional baseline and struggled to scale to high-dimensional, multi-class image data.


The following models were evaluated during development but underperformed relative to the Swin Transformer in terms of weighted F1 score and convergence.

EfficientNet

- Achieved a test accuracy of 80.2%, but a very low weighted F1 score of 0.069, indicating strong class imbalance effects and poor per-class performance.

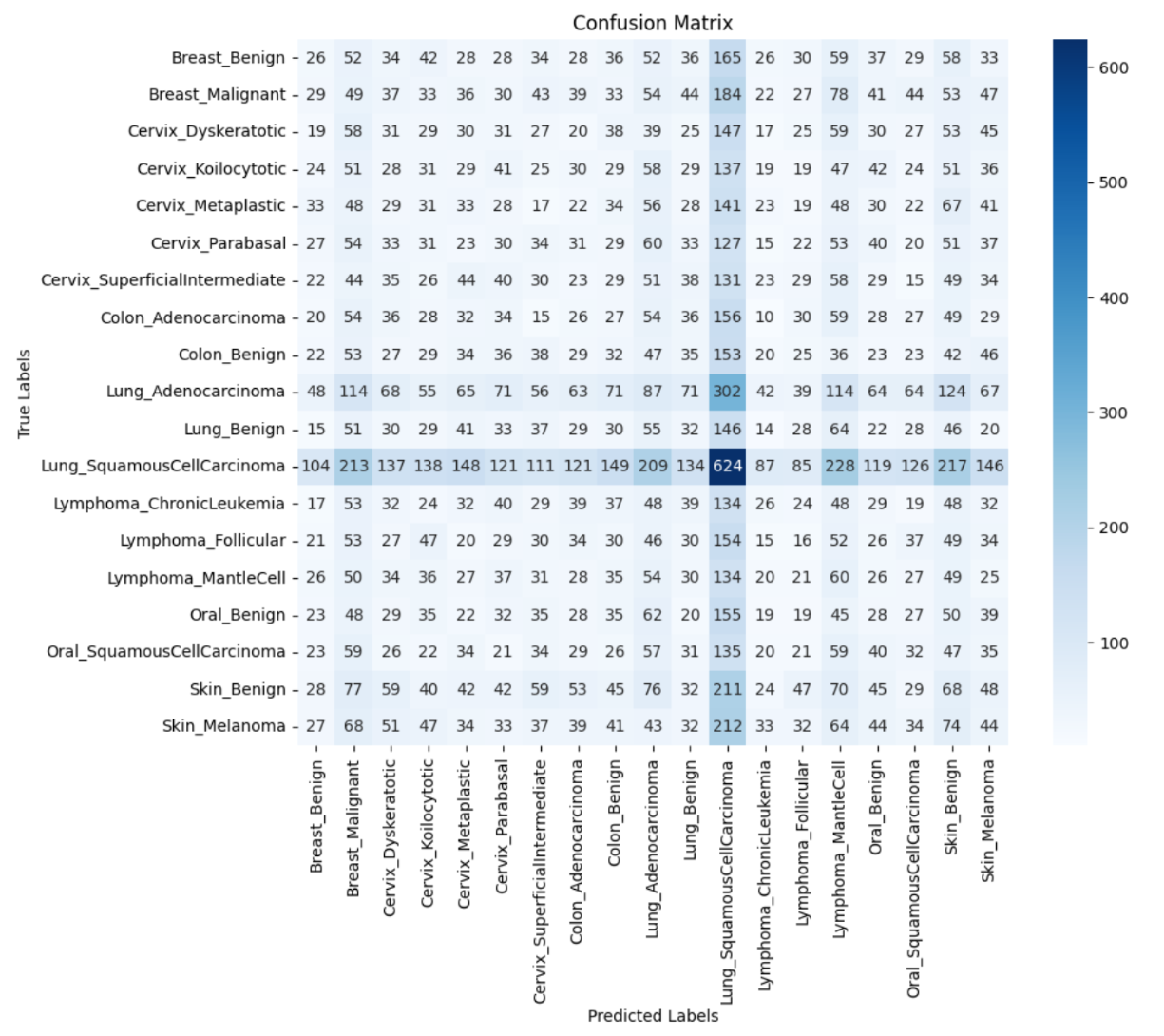

- The confusion matrix reveals extensive misclassification across classes, including a strong bias toward the dominant class as well as a high number of false negatives within the majority class itself, indicating poor class separation overall.

Multilayer Perceptron

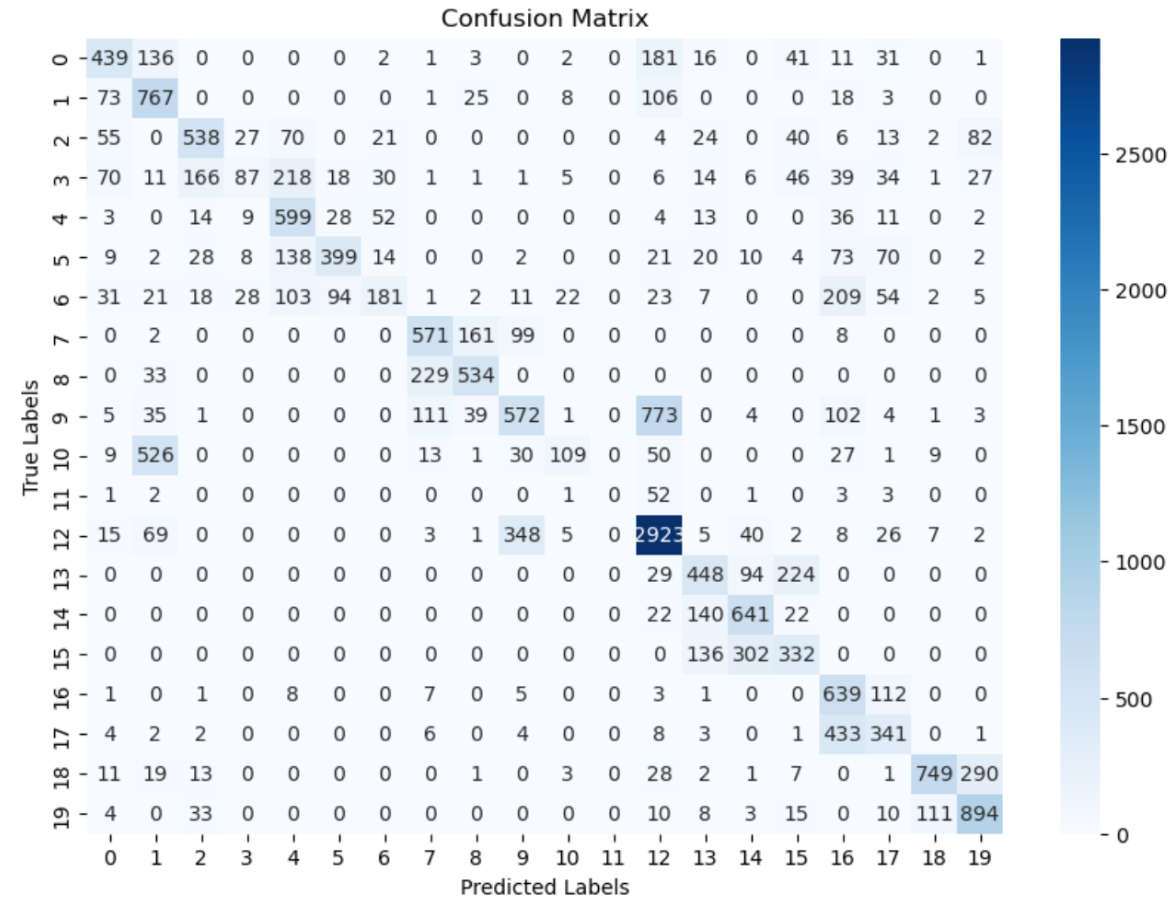

- Achieved a test accuracy of 49.0% with a weighted F1 score of 0.576, indicating limited overall performance but improved class-level balance compared to EfficientNet.

- The confusion matrix shows greater concentration along the diagonal than EfficientNet, suggesting better handling of class imbalance, though a substantial number of false positives and false negatives remain.

ResNet50

- Achieved a test accuracy of 83.9% and a weighted F1 score of 0.826, demonstrating improved performance over EfficientNet and MLP.

- The confusion matrix exhibits strong diagonal alignment, with most errors concentrated between visually similar classes (e.g., skin_benign vs. skin_melanoma), suggesting remaining challenges in fine-grained visual discrimination.

Conclusion

- Swin Transformer achieved the best overall performance, substantially outperforming all baseline models.

- Hierarchical attention enabled effective modeling of complex and visually similar cell types.

- Weighted F1 score confirmed consistent performance across imbalanced cancer classes.

- The findings support Swin Transformers as a compelling architecture for medical imaging applications.

Overall, this project demonstrates the advantages of transformer-based architectures over traditional convolutional and fully connected models for large-scale medical image classification.